The Problem

Mobile and casual game soundtracks tend to be limited by technical constraints and development costs. Generally, they consist of some background music and a few sound effects. These might be created by a composer and a sound designer, or a producer may have simply pulled tracks off library CDs.

Either way, it is not unusual for there to be little, if any, coordination between the music and the sound effects. This isn't an issue for non-tonal sounds, such as gunfire or derisive pig grunts, but can be problematic when the effects are musical in nature, such as the "ding!" of a pinball bumper, or a "ta-da!" chord when the level is completed. A bonus sound consisting of an Eb Major chord will sound painfully discordant if the background music happens to be in A minor.

A Solution

The Secret Yanni Technique (see footnote) can be used to avoid this problem, by writing background music in a specific mode, then producing sound effects to fit that mode. Here's a simple example:

Compose music in the key of C Major, then create bonus sounds using a C pentatonic scale (C, D, E, G, A).
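Here is a minimal C sketch of the relationship. The function and array names are hypothetical and not taken from the game. The C pentatonic notes are degrees 1, 2, 3, 5, and 6 of the C Major scale, expressed as semitones above C, so any random pick from that set stays inside the mode.

```c
#include <assert.h>
#include <stddef.h>

/* Semitone offsets of the major scale above its root (degrees 1-7). */
static const int MAJOR_SCALE[7] = {0, 2, 4, 5, 7, 9, 11};

/* 0-based scale degrees kept for the major pentatonic: 1, 2, 3, 5, 6. */
static const int PENTATONIC_DEGREES[5] = {0, 1, 2, 4, 5};

/* Fill out[] with the five pentatonic offsets above root (0 = C). */
void buildPentatonic(int root, int out[5])
{
    assert(out != NULL);
    for (int i = 0; i < 5; i++) {
        out[i] = root + MAJOR_SCALE[PENTATONIC_DEGREES[i]];
    }
}

/* Hypothetical helper: map any random number onto a pentatonic note,
   so a bonus sound triggered at any moment stays inside the mode. */
int pickBonusNote(int root, unsigned int randomValue)
{
    int pent[5];
    buildPentatonic(root, pent);
    return pent[randomValue % 5];
}
```

Passing a different root, for example 7 for G, transposes the whole set. That single value is what a key-tracking audio engine would need to update.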

However, this solution has its own problem: In the non-linear interactive world of a game level, a bonus sound can be played at any time during gameplay. Therefore, harmonic movement (i.e. key changes) must be strictly limited; otherwise, the bonus sounds will inevitably clash with the music.

A Better Solution

BUT what if the game's audio engine kept track of what key was playing, and generated bonus sounds to match? I wanted to demonstrate this approach, and so wrote a game for the Android platform called LandSeaAir that uses the FMOD Interactive Music System to do just that.

The FMOD Event API supports a variety of callbacks: messages sent by the audio engine to tell the program when certain conditions have been reached. The interactive score is made up of cues, each consisting of one or more segments, which are containers holding one or more samples of music. Whenever a segment begins to play, the audio engine issues a "segment start" callback containing an identifying number, which the application uses to trigger its own code.
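Here is a minimal C sketch of that idea. It does not reproduce the FMOD callback signature. onSegmentStart() is a hypothetical function you would call from inside the real callback, and the segment-to-key table is invented for illustration.

```c
#include <assert.h>

/* Keys used by the sketch, as the pitch class of each root (0 = C). */
typedef enum { KEY_C = 0, KEY_E = 4, KEY_AB = 8 } Key;

/* Hypothetical table: the key each music segment is written in.
   Index = the segment number reported by the "segment start" callback. */
static const Key SEGMENT_KEYS[] = { KEY_C, KEY_C, KEY_E, KEY_E, KEY_AB, KEY_AB };
#define NUM_SEGMENTS ((int)(sizeof SEGMENT_KEYS / sizeof SEGMENT_KEYS[0]))

/* The key the bonus-sound code should conform to right now. */
static Key currentKey = KEY_C;

/* Call this from the audio engine's segment-start callback. */
void onSegmentStart(int segmentId)
{
    assert(segmentId >= 0 && segmentId < NUM_SEGMENTS);
    currentKey = SEGMENT_KEYS[segmentId];
}

Key getCurrentKey(void)
{
    return currentKey;
}
```

With this in place, the bonus-sound code only has to call getCurrentKey() before choosing notes.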

Bonus sounds are then generated algorithmically based on whatever key is currently playing, as determined by the segment callback. An event containing a short musical note is played multiple times, repitched each time to fit the correct musical scale. This way, intervals and melodies can be produced that not only blend with the background music, but also conform to key changes, even during modulations -- something a static, pre-recorded sample cannot do.

The only drawback to using this technique is that it breaks the strictly "data-driven" model, whereby interactive game audio is defined using FMOD Designer and then rendered during gameplay by the FMOD Event system. In fact, to successfully implement The Secret Yanni Technique with key changes, the sound designer must work closely with the programmer, or better yet, you need an audio guy who can write code.

Three Levels

I wanted to show how this technique can be used in a variety of musical styles, so I wrote three levels, each utilizing different interactive audio techniques:

Air Level -- A bird chases bugs and butterflies: When the bird swallows a bug, one of ten melodies is generated, conformed to the key currently playing. When the bird eats a butterfly, the key of the music will change depending on its color (blue butterfly = C, green = E, red = Ab). After catching 6 bugs, the music changes from Major keys (blue sky background) to minor keys (nighttime).

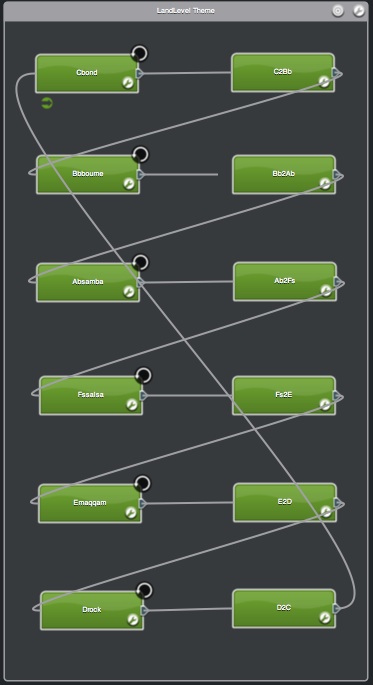

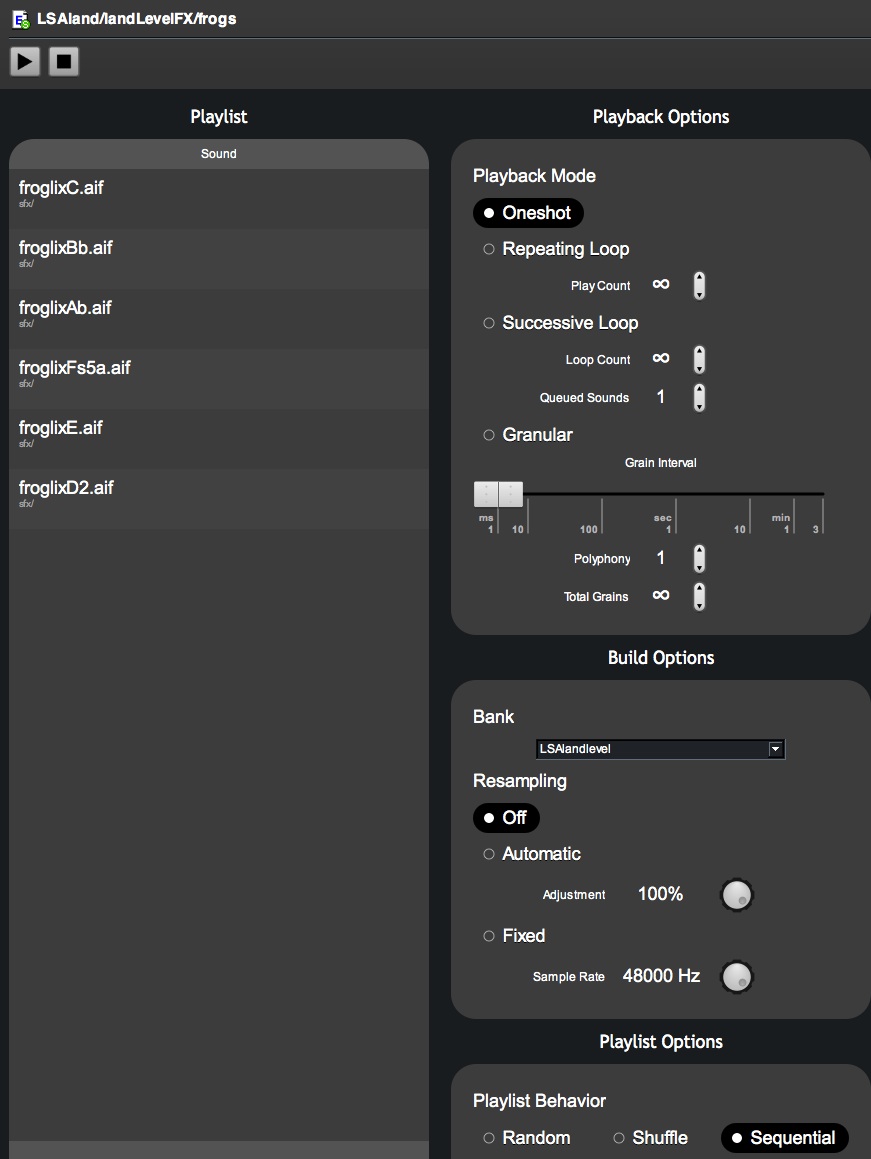

Land Level -- A snake chases rats and frogs: When the snake eats a rat, a consonant interval in the current key is played. When the snake catches a frog, a melodic phrase is played, then the music modulates down a whole step, cycling through 6 different terrains and musical styles: James Bond (C minor), Jason Bourne (Bb minor), Samba (Ab Major), Salsa (F# minor), Arabic (E maqam), and Rock (D minor).
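The key sequence is easy to check as pitch classes (0 = C, 1 = C#, and so on up to 11 = B). This small C sketch is illustrative rather than code from the game. It steps down two semitones per terrain.

```c
#include <assert.h>

static const char *NOTE_NAMES[12] = {
    "C", "C#", "D", "Eb", "E", "F", "F#", "G", "Ab", "A", "Bb", "B"
};

/* Pitch class (0-11) of the root of terrain n: start on C and drop
   a whole step (2 semitones) per terrain, wrapping modulo 12. */
int terrainRoot(int n)
{
    assert(n >= 0);
    return ((0 - 2 * n) % 12 + 12) % 12;
}

const char *terrainRootName(int n)
{
    return NOTE_NAMES[terrainRoot(n)];
}
```

Six whole steps add up to 12 semitones, so the arithmetic wraps from D back to C after the sixth terrain.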

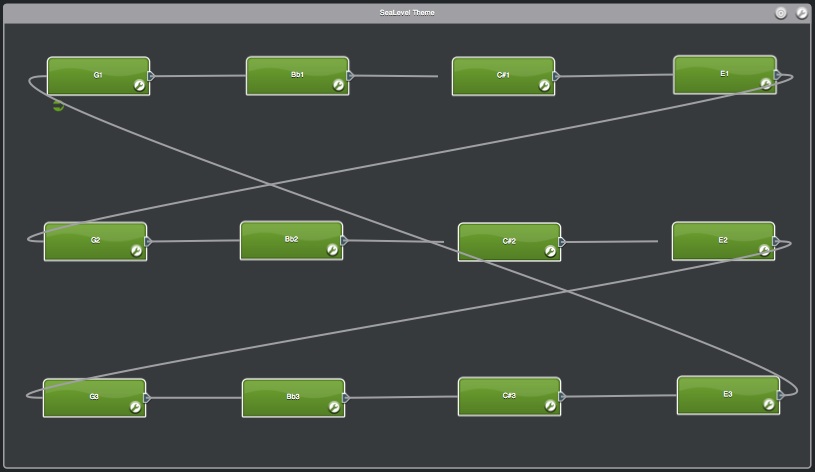

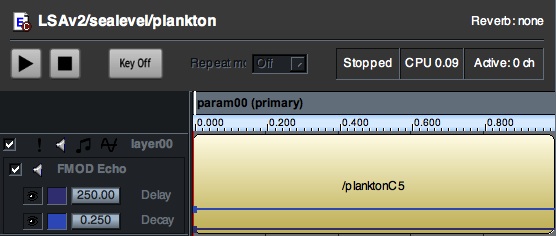

Sea Level -- A manta ray eats plankton: The music loops through four minor keys, modulating up a minor third every 4 bars (||: G => Bb => C# => E :||). When a plankton is caught, a note from the current scale is generated using a fractal algorithm, which produces far more melodic phrases than simply playing notes at random.

For each level, whenever the music modulates, a pop-up "toast" displays the new key.

Interactive Music Implementation

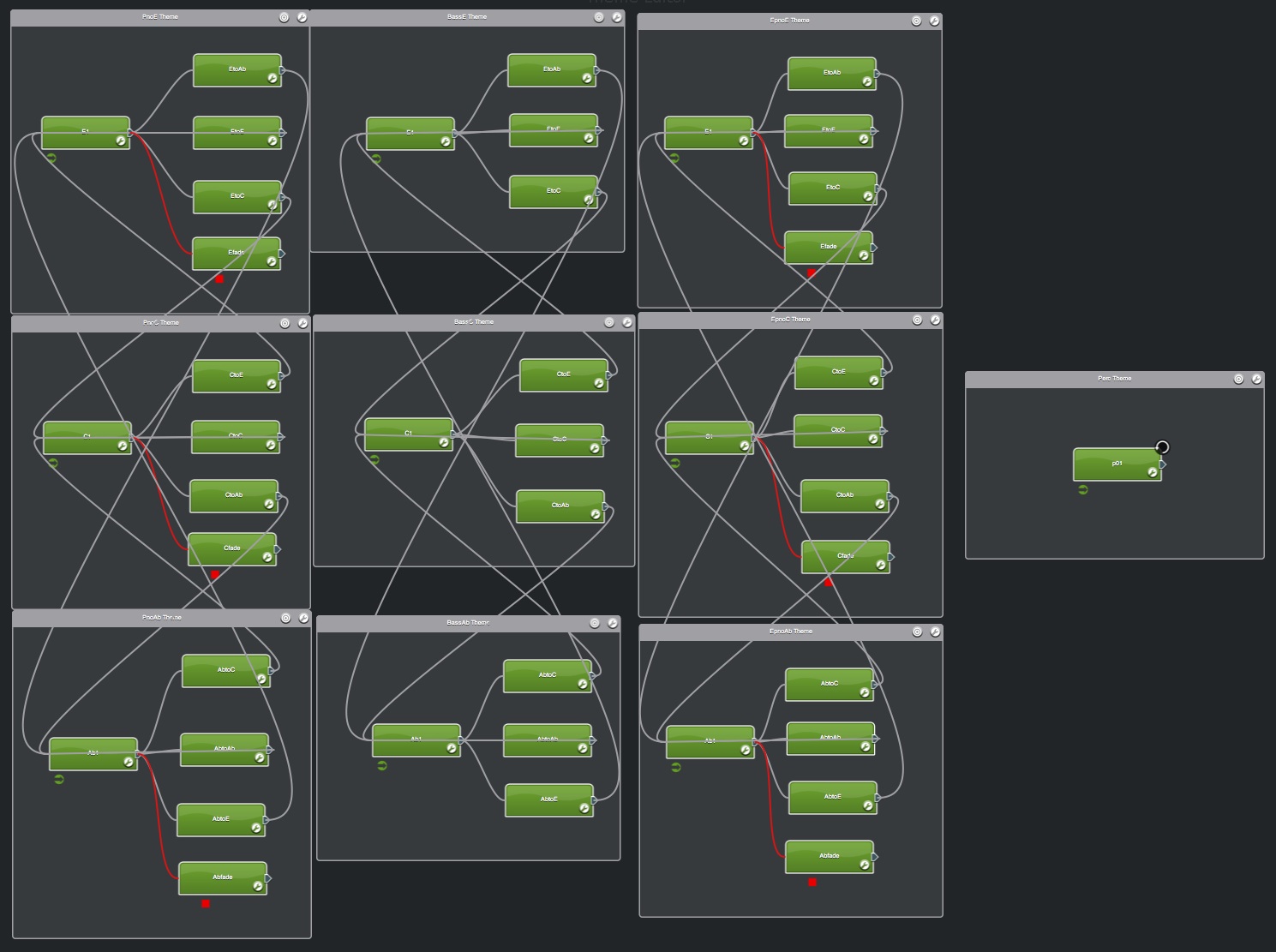

The Air Level FMOD Designer file is the most complicated implementation in the game, because it is the most interactive. Any key can modulate to any other, in three different instruments (bass, piano, and electric piano). Each phrase is divided into two bars of 6/8: a main start segment (indicated by the green circled arrow) and a transition segment that either loops back to the main segment or modulates to a different key.

Which transition segment gets played is determined by the keyChange parameter, as controlled by the game code. For example, when a green butterfly is eaten, the keyChange parameter is set to 1, which causes the music to segue to the key of E. The numerous gray pathways determine the order in which segments are played. Red lines connect to a short fade-out segment, played when the instrument cue is stopped.

The bass instrument is designed to be modal, meaning it purposefully avoids playing the Major or minor third in any of the three keys. This allows the bass line to accompany both the Major key piano segments (played during "daytime") and the minor key electric piano chords (played under starry skies). The percussion segment plays through 12 drum loop samples.

The trickiest part of the Air Level implementation is that the cues must be started, and the keyChange parameter must be set, at the right time during the musical sequence. Otherwise, the various segments can get out of sync, which creates a horrible discordance. Fortunately, I was able to use the "beat" callback in conjunction with the "segment start" callback to only start cues, and change the parameter, on the 3rd beat of a transition segment. This allows the bass line to play continuously throughout the level, while the piano and e.piano instruments can be toggled on and off at will.
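The gating logic might look something like the following C sketch. The function names are hypothetical, and keyChangeParam stands in for the real FMOD parameter call. Counting beats from 1 within each segment is my assumption, not something taken from the FMOD documentation.

```c
#include <assert.h>
#include <stdbool.h>

/* Stand-in for the value of the FMOD "keyChange" parameter. */
static int keyChangeParam = 0;

/* -1 means no key change is waiting to be applied. */
static int pendingKeyChange = -1;
static bool inTransition = false;

/* Game code: a butterfly was eaten, so queue the request. */
void requestKeyChange(int value)
{
    assert(value >= 0);
    pendingKeyChange = value;
}

/* Called from the segment-start callback. */
void onSegmentBegin(bool isTransitionSegment)
{
    inTransition = isTransitionSegment;
}

/* Called from the beat callback. Queued changes are applied only on
   beat 3 of a transition segment, so all instrument cues stay aligned. */
void onBeat(int beat)
{
    if (inTransition && beat == 3 && pendingKeyChange >= 0) {
        keyChangeParam = pendingKeyChange;
        pendingKeyChange = -1;
    }
}
```

Starting or stopping the piano and electric piano cues could be deferred the same way: queue the request and act on it only inside onBeat().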

The Land Level implementation is much simpler, consisting of six looping segments (indicated by the black circling arrows), with six corresponding transition segments, played sequentially. When a frog is eaten, the "modulate" parameter is set to 1, which causes the next transition segment to start, which in turn issues a callback that sets the parameter back to 0, so that the next looping segment will play until another frog is caught, and so on. It's like climbing down a ladder while pausing at each rung until the go-ahead signal is given.
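A rough C sketch of that handshake follows, with the parameter mirrored in a plain variable. The real code would call the FMOD parameter API instead, and all names here are hypothetical.

```c
#include <assert.h>

static int modulateParam = 0;   /* stand-in for the FMOD "modulate" parameter */
static int terrain = 0;         /* 0..5: which looping segment is current */

/* Game code: the snake caught a frog, so let the loop exit. */
void onFrogCaught(void)
{
    modulateParam = 1;
}

/* Segment-start callback for a transition segment: reset the
   parameter so the next looping segment repeats until another frog. */
void onTransitionStart(void)
{
    assert(modulateParam == 1);  /* a transition should only follow a request */
    modulateParam = 0;
    terrain = (terrain + 1) % 6;
}
```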

The Sea Level implementation is the simplest of all, with one cue containing 12 segments and no parameters. The same sound could have been produced using one long looping sample, but instead, the music is cut at 4-bar intervals, each containing phrases in a different key. This way, "segment start" callbacks indicate when the key changes, so bonus notes can be selected from the appropriate scale.
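Assume the 12 segments play in order and cycle through the four keys. That is an assumption, since the actual segment layout lives in the FMOD Designer file. Under it, mapping a segment number to its key is a one-line lookup, sketched here in C.

```c
#include <assert.h>

/* Pitch classes of the Sea Level keys in playback order: G, Bb, C#, E.
   Each is a minor third (3 semitones) above the previous, modulo 12. */
static const int SEA_KEYS[4] = {7, 10, 1, 4};

/* Assumes segments 0..11 run in order, cycling through the four keys. */
int seaKeyForSegment(int segmentIndex)
{
    assert(segmentIndex >= 0 && segmentIndex < 12);
    return SEA_KEYS[segmentIndex % 4];
}
```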

Bonus Notes

The sounds played by sprite collisions (e.g. bird eats bug) are simple FMOD events, played in various sequences and transposed by a number of semitones determined by an algorithm and the current key. Although bonus notes are not part of the FMOD Interactive Music System (they are defined as sound effects), the game code controls their playback timing and pitch to create the desired musical results.

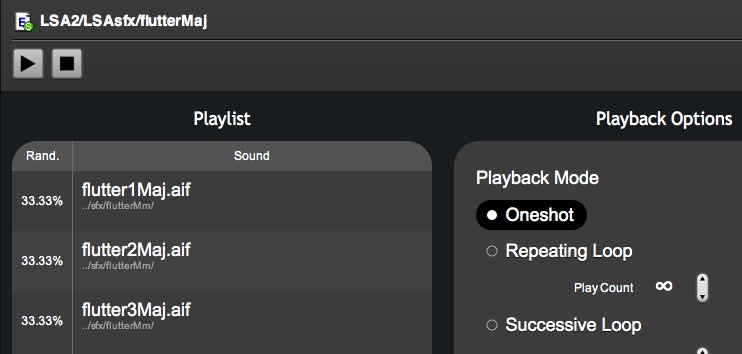

The Air Level bird "tweet" and Land Level rat "squeak" are the simplest events possible, providing basic "here's a sample, play it" functionality. The Air Level butterfly event is slightly more complex, in that there are two events (one for Major, one for minor), each of which contains three fluttery flute samples of chord inversions in C. When a butterfly is caught, the code determines which event to play (daytime for Major, nighttime for minor) and then one of the three samples is played at random, transposed to the current key as necessary. Thus, eating a butterfly will produce one of 18 different sounds, depending on its color, and when it was caught.
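The selection logic can be sketched in C as below, as a hypothetical illustration. The transposition amounts follow the colors and keys listed for Air Level (blue = C, green = E, red = Ab). In the game, the random choice among the three samples may well be handled inside the FMOD event itself rather than in code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

typedef enum { BLUE, GREEN, RED } ButterflyColor;

/* Semitone shift from the C samples to each butterfly's key:
   blue = C (0), green = E (+4), red = Ab (-4). */
static int transposeFor(ButterflyColor color)
{
    switch (color) {
    case GREEN: return 4;
    case RED:   return -4;
    case BLUE:
    default:    return 0;
    }
}

typedef struct {
    bool minorEvent;   /* nighttime -> minor event, daytime -> Major event */
    int  sampleIndex;  /* which of the three chord-inversion samples */
    int  semitones;    /* pitch shift applied at playback */
} ButterflySound;

/* Hypothetical selection step; the actual playback call is omitted. */
ButterflySound chooseButterflySound(ButterflyColor color, bool night)
{
    ButterflySound s;
    s.minorEvent  = night;
    s.sampleIndex = rand() % 3;
    s.semitones   = transposeFor(color);
    assert(s.sampleIndex >= 0 && s.sampleIndex < 3);
    return s;
}
```

Three colors times two events times three samples gives the 18 combinations.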

The Land Level frog "licks" event contains 6 samples, and triggers one in sequence each time the event is played. When the first frog is eaten, the first sample is played, which is a melodic sequence appropriate for the first terrain. In the next terrain, when the frog is eaten, the second sample is triggered, and so on.

The Sea Level plankton consists of a sample modified by the FMOD Echo effect. Setting the Delay and Decay rates to 250ms produces a pleasing sonar-like tone. If I had baked the effect into the sample, the echo would change each time the event was played at a different pitch (higher = faster, lower = slower). By using the FMOD effect, the ping-ng-ng-ng rate remains the same for every event, thus often syncing serendipitously with the tempo of the music, which happens to be 120 BPM (two beats per second).
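The numbers behind that happy accident are easy to check. At 120 BPM one beat lasts 60000 / 120 = 500 ms, so a 250 ms echo lands exactly on the eighth notes. A baked-in echo, by contrast, would be resampled along with the note. This C sketch computes both; the function names are illustrative, and it assumes repitching is done by changing playback speed.

```c
#include <assert.h>

/* 2^(1/12): frequency ratio of one equal-tempered semitone. */
#define SEMITONE_RATIO 1.0594630943592953

/* Length of one beat in milliseconds at the given tempo. */
double beatMs(double bpm)
{
    assert(bpm > 0.0);
    return 60000.0 / bpm;
}

/* Playback-speed factor for a sample repitched by n semitones. */
static double speedFactor(int semitones)
{
    double f = 1.0;
    for (int i = 0; i < semitones; i++) f *= SEMITONE_RATIO;
    for (int i = 0; i > semitones; i--) f /= SEMITONE_RATIO;
    return f;
}

/* Echo spacing if the echo were baked into the sample: repitching by
   resampling speeds the echoes up (or slows them down) by the same factor. */
double bakedEchoMs(double delayMs, int semitones)
{
    assert(delayMs > 0.0);
    return delayMs / speedFactor(semitones);
}
```

Shifted up an octave, a baked-in 250 ms echo would shrink to 125 ms (sixteenth notes). At most other intervals it would drift away from simple note values.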

FMOD Integration

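As usual, once the sounds and interactivity are set in FMOD Designer, event (.fev) and soundbank (.fsb) files are exported to be integrated into the code. I did this the same way as my Vector Pinball game soundtrack, with a few modifications:

- The soundbank files are fairly high resolution, and thus somewhat large. Rather than copy them out of the .apk file into internal memory the first time the game is played, I store them on the external SD card instead, and set the mediaPath to point to the "sdcard/fmod" folder. The only drawback is that when the user deletes the app, the "fmod" folder remains on the SD card and must be removed by hand <shrug>.

- Each level has its own .fev and .fsb file. To be memory efficient, the FMOD engine is initialized when the menu is first displayed, then the necessary event files are loaded and unloaded for each level. When the level is initialized, the music files must be expanded and read into RAM to prevent hiccuping during playback. This produces a pause before gameplay starts, but since only the files needed for that specific level are processed, the delay is as short as possible.

- Calls to the FMOD API are written in C, and all the code required to perform the audio processing is located in four files: main.c, which allocates memory and initializes the Event / Music systems, and airAudio.c, landAudio.c, and seaAudio.c, which contain the audio functions for each level. Most of those functions are called from FMODaudio.java via the Java Native Interface (JNI).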

Generating Melodies

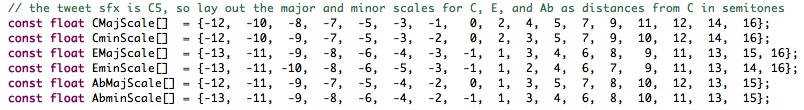

The bonus sound event for each level contains a single short note at a specific pitch, so musical scales can be defined in the code as an array of semitone offsets from that pitch. For example, in Air Level, the "tweet" event contains the note C5 (two octaves above middle C). So a C Major scale can be defined by a series of numbers representing notes as distances from C5 in half steps. Thus, 0 = C, 2 = D, 4 = E, 5 = F, and so on. Major and minor scales for all keys used in the level are defined this way.

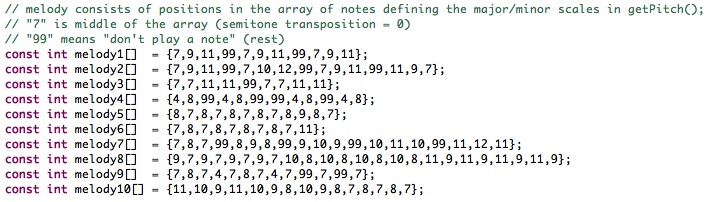

Melodies are defined as a series of indexes into the scale array. For example, melody1[] = {7, 9, 11, 99, 7, 9, 11, 99, 7, 9, 11}; this means "When playing the 1st bonus note, transpose it by the number of semitones stored at index 7 of the scale array for the current key. For the 2nd note, transpose by the offset stored at index 9. For the 3rd note, use the offset at index 11," and so on. "99" is code for "rest", meaning "don't play a note".

So if the current key is C Major, melody1[] will play the notes "C, E, G, rest, C, E, G, rest, C, E, G" ... but if the key is C minor, the notes produced will be "C, Eb, G" etc., because element 9 of CMinScale[] = 3 = Eb, whereas element 9 of CMajScale[] = 4 = E. Using this method, melodies can be conformed to whatever key is playing, even during key modulations.
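Here is a minimal C sketch of that lookup. The array names follow the text, but their contents are an assumption. Each array is taken to start one octave below the C5 sample, which is what makes index 7 land on C (offset 0), index 9 on the third, and index 11 on G. The melodyPitch() helper is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define REST 99        /* melody code for "don't play a note" */
#define SCALE_LEN 15

/* Assumed layout: semitone offsets from the C5 sample, starting one
   octave below it so that index 7 is the sample's own pitch (0). */
static const int CMajScale[SCALE_LEN] = {-12, -10, -8, -7, -5, -3, -1, 0, 2, 4, 5, 7, 9, 11, 12};
static const int CMinScale[SCALE_LEN] = {-12, -10, -9, -7, -5, -4, -2, 0, 2, 3, 5, 7, 8, 10, 12};

static const int melody1[] = {7, 9, 11, REST, 7, 9, 11, REST, 7, 9, 11};

/* Writes the transposition for one step of a melody and returns true,
   or returns false if that step is a rest. */
bool melodyPitch(const int *melody, int step, bool minor, int *semitones)
{
    int idx = melody[step];
    if (idx == REST) return false;
    assert(idx >= 0 && idx < SCALE_LEN);
    *semitones = minor ? CMinScale[idx] : CMajScale[idx];
    return true;
}
```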

Timing Is Everything

All FMOD implementations must call EventSystem_Update in a continuous loop while the audio engine is running. This ensures that events play correctly, and callbacks are issued promptly. Since the function is called in the C level code every 75 milliseconds, I used it to perform double duty as the Air Level bonus melody tempo.

When a bug is eaten, the cPlayBonusSound function in airAudio.c sets the playingBonus flag, then loads one of ten melodies into the bonusMelody array. Subsequently, each time AirUpdate() is called from main.c, bonusSound() is called if the melody is active. On each iteration, the index for the melody array is incremented, and the number of semitones to transpose the C5 note in the "tweet" event is determined by the getPitch() function, which is where the scales are defined.
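A simplified C sketch of that update-driven sequencer follows. playTweet() is a hypothetical stub standing in for playing the "tweet" event at a given pitch. startBonus() and bonusTick() only loosely mirror cPlayBonusSound() and bonusSound(); they are not the game's code.

```c
#include <assert.h>
#include <stdbool.h>

#define REST 99
#define MAX_MELODY 16

/* Hypothetical stub: would play the "tweet" event shifted by n semitones. */
static int lastPlayed = 0;
static int notesPlayed = 0;
static void playTweet(int semitones) { lastPlayed = semitones; notesPlayed++; }

static int  bonusMelody[MAX_MELODY];
static int  bonusLength = 0;
static int  bonusStep = 0;
static bool playingBonus = false;

/* Start a melody when a bug is eaten. */
void startBonus(const int *melody, int length)
{
    assert(length > 0 && length <= MAX_MELODY);
    for (int i = 0; i < length; i++) bonusMelody[i] = melody[i];
    bonusLength = length;
    bonusStep = 0;
    playingBonus = true;
}

/* Called once per audio update (about every 75 ms in the game), so the
   update rate doubles as the melody tempo. scale maps indexes to semitone
   offsets for the current key. */
void bonusTick(const int *scale)
{
    if (!playingBonus) return;
    int idx = bonusMelody[bonusStep];
    if (idx != REST) playTweet(scale[idx]);
    if (++bonusStep >= bonusLength) playingBonus = false;
}
```

Each call advances exactly one step, so a rest costs one tick of silence and the melody's rhythm is quantized to the update interval.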

Various offset processing is performed as well. The roots of the melodies need to be transposed up 4 semitones (for the key of E) or down 4 semitones (for the key of Ab) to maintain the desired tonality. For example, if the key is E Major, melody1[] should play "E, G#, B" (not "C#, E, G#"). Because the scales are diatonic, simple arithmetic to shift the index up or down accomplishes this easily.

However, to ensure good voice leading, another flag (bonusStartKey) is used to indicate whether the key has changed while the melody is playing. If it has, it sounds better to continue playing the melody in the original register while conforming it to the new key. Otherwise, you get unpleasant melodic jumps that sound like errors, even though, technically, the "right" notes are being played.
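One way to express both adjustments is sketched below in C, for Major keys only and under these assumptions:

- Each key's scale array lists that key's scale tones starting one octave below C5. Index 7 therefore falls on C# in E Major and on C in Ab Major.
- The shift for E and Ab is two scale steps, a major third.
- The shift comes from the key the melody started in, while the notes come from the key playing now. This is a simplified reading of what bonusStartKey is used for.

All function names are hypothetical.

```c
#include <assert.h>

#define SCALE_LEN 22

/* Roots used by the sketch, as pitch classes (0 = C). */
enum { ROOT_C = 0, ROOT_E = 4, ROOT_AB = 8 };

static const int MAJOR_STEPS[7] = {0, 2, 4, 5, 7, 9, 11};

/* Build a Major scale as semitone offsets from C5, listing every scale
   tone from one octave below C5 upward (an assumed layout). */
void buildMajorScale(int root, int scale[SCALE_LEN])
{
    int n = 0;
    for (int s = -12; n < SCALE_LEN; s++) {
        int interval = ((s - root) % 12 + 12) % 12;  /* distance above the root */
        for (int k = 0; k < 7; k++) {
            if (MAJOR_STEPS[k] == interval) { scale[n++] = s; break; }
        }
    }
}

/* Index shift that keeps a melody on its own root:
   E is two scale steps above C, Ab two scale steps below. */
int degreeShift(int root)
{
    switch (root) {
    case ROOT_E:  return 2;
    case ROOT_AB: return -2;
    default:      return 0;
    }
}

/* Register (shift) comes from the key the melody started in;
   the actual notes come from the key that is playing now. */
int conformedPitch(int melodyIndex, int startRoot, int currentRoot)
{
    int scale[SCALE_LEN];
    buildMajorScale(currentRoot, scale);
    int idx = melodyIndex + degreeShift(startRoot);
    assert(idx >= 0 && idx < SCALE_LEN);
    return scale[idx];
}
```

For example, conformedPitch(9, ROOT_C, ROOT_E) returns 4. A melody that began in C keeps its E rather than leaping up to G#, even though the music has moved to E Major.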

Persistence Of Harmony

The "right note sounds wrong" issue also cropped up in the Sea Level bonus sound generation. Four minor scales are defined as a series of semitone offsets as before, but instead of playing preset melodies, the amount to transpose the "sonar ping" sound is selected using a fractal algorithm (also known as 1/f or "pink" noise).

I first came across this method for computer generated melodies in Martin Gardner's Mathematical Games column titled "White, Brown, and Fractal Music" in Scientific American in 1978. In fact, this seminal article so influenced my interest in the field of interactive audio, I credit it with one of the reasons we are having this conversation. The algorithm itself is fairly simple, assigning notes by throwing dice:

In seaAudio.c, I used this algorithm to choose the semitone offset amount from the corresponding scale array. It worked like a charm, but I discovered an unexpected effect -- after a key change, if the first note played was not also available in the previous key, the note sounded "wrong", even though it was technically correct. For example, when the key changes from G minor to Bb minor, if the first note chosen by the fractal algorithm was Db, it sounded bad, despite being diatonic to the new key (but not the previous one).
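For reference, Gardner's three-dice procedure can be sketched in C as follows. This is an illustrative reconstruction, not the code in seaAudio.c. Each die owns one bit of a 3-bit counter, and only the dice whose bits change are re-tossed. The sum (3 to 18) is shifted to 0 to 15 for use as an index into a 16-entry scale array. Wrapping the counter so the pattern repeats every eight notes is my simplification.

```c
#include <assert.h>
#include <stdlib.h>

#define NUM_DICE 3

/* Current face (1..6) of each die: index 0 = red, 1 = green, 2 = blue. */
static int dice[NUM_DICE];
/* 3-bit counter; bit k belongs to die k. */
static unsigned counter = 0;

static int rollDie(void) { return rand() % 6 + 1; }

static int diceSum(void)
{
    int sum = 0;
    for (int k = 0; k < NUM_DICE; k++) sum += dice[k];
    assert(sum >= NUM_DICE && sum <= 6 * NUM_DICE);
    return sum;
}

/* First note: toss all three dice. Returns 0..15. */
int fractalStart(void)
{
    counter = 0;
    for (int k = 0; k < NUM_DICE; k++) dice[k] = rollDie();
    return diceSum() - 3;
}

/* Next note: advance the counter and re-toss only the dice whose bits changed. */
int fractalNext(void)
{
    unsigned next = (counter + 1) % (1u << NUM_DICE);
    unsigned changed = counter ^ next;
    for (int k = 0; k < NUM_DICE; k++) {
        if (changed & (1u << k)) dice[k] = rollDie();
    }
    counter = next;
    return diceSum() - 3;
}
```

The red die changes on every note, the green die every second note, and the blue die every fourth. That mix gives the output both short-term variety and long-term continuity.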

I called this the Persistence of Harmony effect, because apparently, it takes a moment for your ears to become accustomed to the new tonality. To address the problem, I test the first note after a key change to see whether it is also in the previous key, and if not, I change it to one that is, using a simple transformation matrix.

If This Then Do That

At first, I tried using complex array comparisons to choose the nearest available note, until realizing that the "if this then do that" method was not only easier to implement, but also generated more musical phrases.
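To illustrate the "if this then do that" approach, here is a hypothetical C sketch for one transition, G minor to Bb minor. The three notes that Bb minor adds (Db, Gb, Ab) are swapped for neighbors that both keys share. Those particular substitutions are my own illustrative picks; the game's actual table is not shown in this article.

```c
#include <assert.h>
#include <stdbool.h>

/* Natural minor intervals above the root. */
static const int MINOR_STEPS[7] = {0, 2, 3, 5, 7, 8, 10};

/* True if pitch class pc (0 = C) belongs to the natural minor key on root. */
bool inMinorKey(int pc, int root)
{
    int interval = ((pc - root) % 12 + 12) % 12;
    for (int k = 0; k < 7; k++)
        if (MINOR_STEPS[k] == interval) return true;
    return false;
}

/* First note after a G minor (7) -> Bb minor (10) change. Notes the ear
   has not just heard are swapped for a shared neighbor. */
int firstNoteAfterGtoBb(int pc)
{
    assert(inMinorKey(pc, 10));            /* input must be in Bb minor */
    if (inMinorKey(pc, 7)) return pc;      /* already familiar: keep it */
    switch (pc) {
    case 1:  return 0;    /* Db -> C  */
    case 6:  return 5;    /* Gb -> F  */
    case 8:  return 10;   /* Ab -> Bb */
    default: return pc;
    }
}
```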

The "simpler is better" method was also useful in Land Level for playing bonus sounds when a rat is eaten by the snake. Rather than attempting complicated math to determine consonant diatonic intervals in semitones, I simply pick a note from the scale at random, then assign the second note using a switch statement. Not only did this avoid tricky edge case processing, it gave me better control over the intervals to be played.
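Here is a sketch of the rat-interval idea in C, written for a Major key only. The partner chosen for each scale degree is illustrative, not the game's table. The switch lets degree 7 take a third instead of a fifth. That sidesteps the B-F tritone that a blanket "fifth above" rule would produce in C Major.

```c
#include <assert.h>
#include <stdlib.h>

static const int MAJOR[7] = {0, 2, 4, 5, 7, 9, 11};

/* Degree (0-based) of the second note for a given first degree.
   Comments name the notes as they would sound in C Major. */
int secondDegree(int first)
{
    assert(first >= 0 && first < 7);
    switch (first) {
    case 0:  return 4;  /* C -> G  perfect fifth  */
    case 1:  return 3;  /* D -> F  minor third    */
    case 2:  return 4;  /* E -> G  minor third    */
    case 3:  return 5;  /* F -> A  major third    */
    case 4:  return 7;  /* G -> C  perfect fourth */
    case 5:  return 7;  /* A -> C  minor third    */
    default: return 8;  /* B -> D  minor third, not the B-F tritone */
    }
}

/* Degrees 7 and up continue into the next octave. */
int degreeToSemitones(int degree)
{
    assert(degree >= 0);
    return 12 * (degree / 7) + MAJOR[degree % 7];
}

/* Pick a random first note from the scale, then its partner. */
void ratInterval(int *low, int *high)
{
    int first = rand() % 7;
    *low  = degreeToSemitones(first);
    *high = degreeToSemitones(secondDegree(first));
}
```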

Conclusion

The rest of the code is fairly standard Android game stuff: looping thread, sensor processing, animation sprites, memory allocations, XML layouts, etc. However, there was one audio trick that did transfer over to the graphics processing. A simple way to create seamless looping background ambiences (e.g. wind in the trees) is to:

Be that as it may, in this blog, I discuss audio techniques and technologies, because I'm primarily an audio guy. But since I've also been programming computers of one sort or another since the 1980s, it made more sense to write a game to demonstrate The Secret Yanni Technique with key changes, rather than just describe it -- because "talking about music is like smelling a painting™".

<shameless_plug> Of course, I'm also hoping that some clever and resourceful employer or client might think that an "audio guy who can code" would be a valuable person to have on their team. I can be reached for contracts or job offers at my studio address </shameless_plug>.

If you're interested in the app, the Android code / Eclipse project, or the FMOD Designer files, they can all be downloaded from the FMOD for Android page on my Twittering Machine website.

The game can also be downloaded and installed from LandSeaAir on Google Play.

- pdx

=========================

(footnote)

Why do I call it The Secret Yanni Technique? Many years ago, I described the method to my older brother, the big shot Hollywood music editor. When I said the trick was to write background music that stayed in a single mode, he chuckled disdainfully and said, "Oh, you mean, like Yanni!" Now, I'm not sure if he was putting down Yanni's new age music or mine (or both), but I laughed and said, "Well, yeah, but don't tell anybody!" And thus a nickname was born ...

Ironically, background music in that kind of sonic blanket, minimal harmonic movement, consistently consonant style can work well for both game levels and movie soundtracks -- and for the same reason. It is music designed to be "heard, but not listened to", intending to evoke a mood or emotion without attracting attention to itself.

Actually, I can trace my interest in "single mode music" back to childhood, because my mother was a teacher of the Orff Schulwerk, a music pedagogy for elementary school children, designed by Carl Orff (you know, the guy who wrote Carmina Burana, which every sturm-und-drang, full orchestra and choir, game composer tries to emulate for end of the world combat scenes). Orff's Approach relied on simple rhythms and mallet instruments tuned to pentatonic scales, so that students could basically hit any note at any time, and never sound very "wrong" (unlike banging at random on a piano keyboard).

Pentatonic scales produce a euphonious effect, which can encourage kids to learn about rhythm and harmony. That same "any note at any time sounds OK" sonority is also exactly what you want when creating musical sound effects, since in a game level, you can never predict when any sound will be played. Using only the first, second, third, fifth, and sixth notes of the major scale provides a simple mechanism for generating the desired result.

Of course, this is no coincidence or clever contrivance. Pentatonic scales are hard-wired into the human brain, as aptly demonstrated by Bobby McFerrin at the World Science Festival in June 2009. This is because the frequencies of pentatonic notes are related to each other by low number ratios (2 to 3, 3 to 5, etc.), and our ears have evolved specifically to identify those intervals as "good" (as opposed to high number ratios, like 45 to 32 for a tritone, which sounds "bad"). Or to put it another way: those who could hear and sing the "good" intervals (aka "had musical talent") demonstrated strong brains (and thus good genes), which made them more attractive mates, who had more offspring with that ability.

Pentatonic scales are evidence of evolution in action ...

- The soundbank files are fairly high resolution, and thus somewhat large. Rather than copy them out of the .apk file into internal memory the first time the game is played, I store them instead on the external SD card, and set the mediaPath to point to the "sdcard/fmod" folder. The only drawback is that when the user deletes the app, the "fmod" folder remains on the SD card and must be removed by hand <shrug>.

- Each level has its own .fev and .fsb file. To be memory efficient, the FMOD engine is initialized when the menu is first displayed, then the necessary event files are loaded and unloaded for each level. When the level is initialized, the music files must be expanded and read into RAM to prevent hiccuping during playback. This produces a pause before gameplay starts, but since only the files needed for that specific level are processed, the delay is as short as possible.

- Calls to the FMOD API are written in C, and all the code required to perform the audio processing is located in four files: main.c which allocates memory and initializes the Event / Music systems, and airAudio.c, landAudio.c, and seaAudio.c, which contain the audio functions for each level. Most of those functions are called from FMODaudio.java, via the Java Native Interface (JNI).

Generating Melodies

The bonus sound event for each level contains a single short note at a specific pitch, so musical scales can be defined in the code as an array of semitone offsets from that pitch. For example, in Air Level, the "tweet" event contains the note C5 (two octaves above middle C). So a C Major scale can be defined by a series of numbers representing notes as distances from C5 in half steps. Thus, 0 = C, 2 = D, 4 = E, 5 = F, and so on. Major and minor scales for all keys used in the level are defined this way.

Melodies are defined as a series of indexes into the scale array. For example, melody1[] = {7, 9, 11, 99, 7, 9, 11, 99, 7, 9, 11}; this means "When playing the 1st bonus event, transpose the note by the number of semitones in the 7th place of the scale array for the current key. When playing the 2nd note, transpose by the semitone offset in the 9th place of the scale array. 3rd note = offset by 11th number in the array" and so on. "99" is code for "rest", meaning "don't play a note".

SO if the current key is C Major, melody1[] will play the notes "C, E, G, rest, C, E, G, rest, C, E, G" ... but if the key is C minor, the notes produced will be "C, Eb, G" etc, because the 9th element of CMinScale[] = 3 = Eb, whereas the 9th element of CMajScale[] = 4 = E. Using this method, melodies can be conformed to whatever key is playing, even during key modulations.

Timing Is Everything

All FMOD implementations must call EventSystem_Update in a continuous loop while the audio engine is running. This ensures that events play correctly, and callbacks are issued promptly. Since the function is called in the C level code every 75 milliseconds, I used it to perform double duty as the Air Level bonus melody tempo.

When a bug is eaten, the cPlayBonusSound function in airAudio.c sets the playingBonus flag, then loads one of ten melodies into the bonusMelody array. Subsequently, each time AirUpdate() is called from main.c, bonusSound() is called if the melody is active. On each iteration, the index for the melody array is incremented, and the number of semitones to transpose the C5 note in the "tweet" event is determined by the getPitch() function, which is where the scales are defined.

Various offset processing is performed as well. The root of the melodies need to be transposed up (for the key of E) or down (for the key of Ab) 4 semitones in order to maintain the desired tonality. For example, if the key is E Major, melody1[] should play "E, G#, B" (not "C#, E, G#"). The scales are diatonic, so simple arithmetic to shift the index up or down accomplishes this easily.

However, to ensure good voice leading, another flag (bonusStartKey) is used to indicate whether the key has changed while the melody is playing. If it has, then it sounds better to continue playing the melody in the original register, and conforming it to the new key. Otherwise, you get unpleasant melodic jumps that sound like errors, even though technically, the "right" notes are being played.

Persistence Of Harmony

The "right note sounds wrong" issue also cropped up in the Sea Level bonus sound generation. Four minor scales are defined as a series of semitone offsets as before, but instead of playing preset melodies, the amount to transpose the "sonar ping" sound is selected using a fractal algorithm that produces 1/f (or "pink") noise.

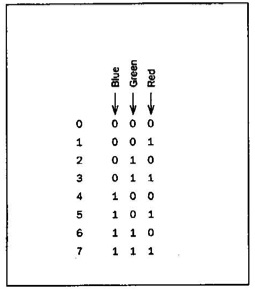

I first came across this method for computer-generated melodies in Martin Gardner's Mathematical Games column titled "White, Brown, and Fractal Music" in Scientific American in 1978. In fact, this seminal article so influenced my interest in the field of interactive audio that I credit it as one of the reasons we are having this conversation. The algorithm itself is fairly simple: it assigns notes by throwing dice, with each die tied to one digit of a three-digit binary counter that steps from 000 to 111 (red for the rightmost digit, green for the middle, blue for the leftmost):

"The method is best explained by considering a sequence of eight notes chosen from a scale of 16 tones. We use three dice of three colors: red, green, and blue. Their possible sums range from 3 to 18. Select 16 adjacent notes on a piano, and number them 3 through 18."

"The first note of our tune is obtained by tossing all three dice and picking the tone that corresponds to the sum. Note that in going from 000 to 001 only the red digit changes. Leave the green and blue dice undisturbed, still showing the numbers of the previous toss. The new sum of all three dice gives the second note of your tune. In the next transition, from 001 to 010, both the red and green digits change. Pick up the red and green dice, leaving the blue one undisturbed, and toss the pair. The sum of all three dice gives the third tone. The fourth note is found by shaking only the red die, the fifth by shaking all three. The procedure, in short, is to shake only those dice that correspond to digit changes."

In seaAudio.c, I used this algorithm to choose the semitone offset from the corresponding scale array. It worked like a charm, but I discovered an unexpected effect: after a key change, if the first note played was not also in the previous key, it sounded "wrong", even though it was technically correct. For example, when the key changed from G minor to Bb minor and the fractal algorithm chose Db as the first note, it sounded bad, despite being diatonic to the new key (though not to the previous one).
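Gardner's dice procedure translates almost directly into C. In this sketch the dice are tied to the bits of a counter, the bits that change at each step select which dice to re-toss, and the dice sum (3 to 18) indexes a 16-entry scale table. rollDie(), the FractalDice struct and the G minor table are hypothetical; I don't know how seaAudio.c lays out its four minor scales or which random source it uses, and letting the counter wrap from 111 back to 000 so the tune can continue past eight notes is my own extension.

```c
#include <assert.h>
#include <stdlib.h>

#define NUM_DICE 3

/* Die 0 (red) follows the rightmost binary digit of the counter,
   die 1 (green) the middle digit, die 2 (blue) the leftmost. */
typedef struct {
    int dice[NUM_DICE];
    unsigned counter;
} FractalDice;

/* rand() is used for brevity; any uniform 1..6 source would do. */
static int rollDie(void) { return rand() % 6 + 1; }

/* First note: toss all three dice. Returns a sum in 3..18. */
static int fractalFirst(FractalDice *fd)
{
    int sum = 0;
    fd->counter = 0;
    for (int i = 0; i < NUM_DICE; i++) {
        fd->dice[i] = rollDie();
        sum += fd->dice[i];
    }
    return sum;
}

/* Next note: step the counter and re-toss only the dice whose digit changed.
   After 111 the counter wraps to 000, so all three dice are re-tossed. */
static int fractalNext(FractalDice *fd)
{
    unsigned next = (fd->counter + 1u) % (1u << NUM_DICE);
    unsigned changed = fd->counter ^ next;
    int sum = 0;
    for (int i = 0; i < NUM_DICE; i++) {
        if (changed & (1u << i))
            fd->dice[i] = rollDie();
        sum += fd->dice[i];
    }
    fd->counter = next;
    return sum;
}

/* Hypothetical 16-entry G natural minor table (semitones above G). */
static const int GMinScale16[16] = {0, 2, 3, 5, 7, 8, 10, 12,
                                    14, 15, 17, 19, 20, 22, 24, 26};

/* Map a dice sum 3..18 to a semitone offset via index 0..15. */
static int fractalOffset(int diceSum)
{
    assert(diceSum >= 3 && diceSum <= 18);
    return GMinScale16[diceSum - 3];
}
```

Because the slow-changing blue die only moves every fourth step, successive notes share most of their "dice history", which is what gives the sequence its 1/f character between random noise and a random walk.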

I called this the Persistence of Harmony effect, because apparently it takes a moment for your ears to adjust to the new tonality. To address the problem, I test the first note after a key change to see whether it was in the previous key, and if not, I change it to one that was, using a simple transformation matrix.

If This Then Do That

At first, I tried using complex array comparisons to choose the nearest available note, until I realized that the "if this then do that" method was not only easier to implement but also generated more musical phrases.
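A sketch of the first-note check for the G minor to Bb minor example. Scales are stored as 12-bit pitch-class masks, which reduces the "was it in the previous key?" test to a single bit test. The replacement rules in the switch (Db to C, Gb to F, Ab to Bb) are my own illustrative picks of notes shared by both keys, not the transformation matrix used in the game, and all names are hypothetical.

```c
#include <assert.h>

/* Pitch classes: 0 = C, 1 = C#/Db, ... 11 = B. */
enum { PC_C, PC_DB, PC_D, PC_EB, PC_E, PC_F, PC_GB, PC_G, PC_AB, PC_A, PC_BB, PC_B };

/* Bit mask of the pitch classes in the natural minor scale on `root`. */
static unsigned minorMask(int root)
{
    static const int steps[7] = {0, 2, 3, 5, 7, 8, 10};
    unsigned mask = 0;
    assert(root >= 0 && root < 12);
    for (int i = 0; i < 7; i++)
        mask |= 1u << ((root + steps[i]) % 12);
    return mask;
}

static int inKey(int pc, unsigned keyMask) { return (keyMask >> pc) & 1u; }

/* First note after a G minor -> Bb minor change: keep it if it was also in
   G minor; otherwise apply a hand-picked "if this then do that" rule. */
static int fixFirstNoteGminToBbmin(int pc)
{
    if (inKey(pc, minorMask(PC_G)))
        return pc;
    switch (pc) {
    case PC_DB: return PC_C;  /* Db -> C  */
    case PC_GB: return PC_F;  /* Gb -> F  */
    case PC_AB: return PC_BB; /* Ab -> Bb */
    default:    return pc;    /* not in Bb minor either; leave it alone */
    }
}
```

A complete version would need one such rule set per pair of keys the music can move between, which is still a small table that can be tuned by ear.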

The "simpler is better" method was also useful in the Land Level for playing bonus sounds when the snake eats a rat. Rather than attempting complicated math to determine consonant diatonic intervals in semitones, I simply pick a note from the scale at random, then assign the second note using a switch statement. Not only did this avoid tricky edge-case processing, it gave me better control over the intervals being played.
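A sketch of the Land Level approach, assuming a C major scale and a hand-written second note for each scale degree: a mix of fifths and thirds, with B paired with D so that the tritone B to F never comes up. The interval choices, the function names and the use of rand() are illustrative assumptions, not the game's actual switch statement.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical C major scale as semitone offsets: C D E F G A B. */
static const int majorScale[7] = {0, 2, 4, 5, 7, 9, 11};

/* Second note (in semitones) for a given first scale degree, chosen by hand
   instead of computed. */
static int secondNote(int degree)
{
    assert(degree >= 0 && degree < 7);
    switch (degree) {
    case 0:  return 7;   /* C -> G : perfect fifth */
    case 1:  return 9;   /* D -> A : perfect fifth */
    case 2:  return 11;  /* E -> B : perfect fifth */
    case 3:  return 9;   /* F -> A : major third   */
    case 4:  return 11;  /* G -> B : major third   */
    case 5:  return 12;  /* A -> C : minor third   */
    default: return 14;  /* B -> D : minor third; a fifth up (F) would be a tritone */
    }
}

/* Pick a random first note, then look up its partner. */
static void pickBonusPair(int *first, int *second)
{
    int degree = rand() % 7;
    *first = majorScale[degree];
    *second = secondNote(degree);
}
```

Because every pairing is written out explicitly, changing the character of the bonus sound (all thirds, say) means editing seven case labels rather than reworking interval arithmetic.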

Conclusion

The rest of the code is fairly standard Android game stuff: a looping thread, sensor processing, animated sprites, memory allocation, XML layouts, etc. However, one audio trick did transfer over to the graphics processing. A simple way to create seamless looping background ambiences (e.g. wind in the trees) is to:

- cut the sample in half

- swap the two pieces, so the second half plays first

- crossfade the splice
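The three steps above can be sketched for a mono float buffer. Swapping the halves puts the original, already continuous midpoint at the loop boundary, so only the new splice in the middle needs smoothing. The function name, the float sample format and the linear fade curve are my assumptions; the result is shorter than the input by the crossfade length because the two halves overlap there.

```c
#include <assert.h>
#include <stddef.h>

/* Swap the halves of `in` (length n), then crossfade the new middle splice
   over `fade` samples. Writes n - fade samples to `out`; returns that count. */
static size_t makeSeamlessLoop(const float *in, size_t n, size_t fade, float *out)
{
    size_t half = n / 2;
    const float *a = in;          /* original first half  */
    size_t lenA = half;
    const float *b = in + half;   /* original second half */
    size_t lenB = n - half;
    assert(fade > 0 && fade <= lenA && fade <= lenB);

    size_t pos = 0;
    /* The second half now plays first, up to the start of the crossfade. */
    for (size_t i = 0; i < lenB - fade; i++)
        out[pos++] = b[i];
    /* Linear crossfade: the tail of B fades out while the head of A fades in. */
    for (size_t i = 0; i < fade; i++) {
        float t = (float)(i + 1) / (float)(fade + 1);
        out[pos++] = b[lenB - fade + i] * (1.0f - t) + a[i] * t;
    }
    /* Rest of A; its last sample was originally followed by B's first sample,
       so the wrap-around from end to start is seamless. */
    for (size_t i = fade; i < lenA; i++)
        out[pos++] = a[i];
    return pos;
}
```

A linear fade keeps the code short; for uncorrelated material like wind noise, an equal-power curve (sine/cosine gains) usually avoids the slight dip in loudness a linear fade can cause mid-splice. The same swap-and-blend idea applies to the columns of a scrolling background image.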

In this blog, I discuss audio techniques and technologies, because I'm primarily an audio guy. But since I've also been programming computers of one sort or another since the 1980s, it made more sense to write a game that demonstrates The Secret Yanni Technique with key changes than to just describe it, because "talking about music is like smelling a painting™".

<shameless_plug> Of course, I'm also hoping that some clever and resourceful employer or client might think that an "audio guy who can code" would be a valuable person to have on their team. I can be reached for contracts or job offers at my studio address </shameless_plug>.

If you're interested in the app, the Android code / Eclipse project, or the FMOD Designer files, they can all be downloaded from the FMOD for Android page on my Twittering Machine website.

The game can also be downloaded and installed from LandSeaAir on Google Play.

- pdx

=========================

(footnote)

Why do I call it The Secret Yanni Technique? Many years ago, I described the method to my older brother, the big-shot Hollywood music editor. When I said the trick was to write background music that stayed in a single mode, he chuckled disdainfully and said, "Oh, you mean, like Yanni!" Now, I'm not sure if he was putting down Yanni's new age music or mine (or both), but I laughed and said, "Well, yeah, but don't tell anybody!" And thus a nickname was born ...

Ironically, background music in that sonic-blanket style, with minimal harmonic movement and consistent consonance, can work well for both game levels and movie soundtracks, and for the same reason: it is music designed to be "heard, but not listened to", intended to evoke a mood or emotion without drawing attention to itself.

Actually, I can trace my interest in "single-mode music" back to childhood, because my mother taught Orff Schulwerk, a music pedagogy for elementary school children designed by Carl Orff (you know, the guy who wrote Carmina Burana, which every Sturm-und-Drang, full-orchestra-and-choir game composer tries to emulate for end-of-the-world combat scenes). Orff's approach relied on simple rhythms and mallet instruments tuned to pentatonic scales, so that students could hit basically any note at any time and never sound very "wrong" (unlike banging at random on a piano keyboard).

Pentatonic scales produce a euphonious effect, which can encourage kids to learn about rhythm and harmony. That same "any note at any time sounds OK" sonority is exactly what you want when creating musical sound effects, since in a game level you can never predict when a given sound will be played. Using only the first, second, third, fifth, and sixth notes of the major scale provides a simple mechanism for generating the desired result.
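As a small illustration, the major pentatonic can be pulled out of a major scale table by keeping degrees 1, 2, 3, 5 and 6, and a bonus sound can then pick any of those notes at random. The tables and function names are hypothetical, and `tonic` is assumed to be the key's offset in semitones from whatever base pitch the sound effect was recorded at.

```c
#include <assert.h>
#include <stdlib.h>

/* Major scale as semitone offsets above the tonic (degrees 1 through 7). */
static const int majorScale[7] = {0, 2, 4, 5, 7, 9, 11};

/* Degrees 1, 2, 3, 5 and 6 (zero-based 0, 1, 2, 4, 5) form the major pentatonic. */
static const int pentatonicDegrees[5] = {0, 1, 2, 4, 5};

/* Semitone offset of pentatonic note n (0..4) in the key whose tonic sits
   `tonic` semitones above the sound's base pitch. */
static int pentatonicNote(int tonic, int n)
{
    assert(n >= 0 && n < 5);
    return tonic + majorScale[pentatonicDegrees[n]];
}

/* A random pentatonic note: unlikely to clash with music that stays in
   the matching key and mode, whenever it happens to fire. */
static int randomPentatonicOffset(int tonic)
{
    return pentatonicNote(tonic, rand() % 5);
}
```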

Of course, this is no coincidence or clever contrivance. Pentatonic scales are hard-wired into the human brain, as aptly demonstrated by Bobby McFerrin at the World Science Festival in June 2009. This is because the frequencies of pentatonic notes are related to each other by low-number ratios (2 to 3, 3 to 5, etc.), and our ears have evolved specifically to identify those intervals as "good" (as opposed to high-number ratios, like 45 to 32 for a tritone, which sounds "bad"). Or to put it another way: those who could hear and sing the "good" intervals (aka "had musical talent") demonstrated strong brains (and thus good genes), which made them more attractive mates, who had more offspring with that ability.

Pentatonic scales are evidence of evolution in action ...
